Docker is designed to make it easier to create, deploy, and run applications by using containers.

Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

The developer can rest assured that the application will run on any other Linux machine regardless of any customized settings that machine might have that could differ from the machine used for writing and testing the code.

Docker is a bit like a virtual machine. But unlike a virtual machine, rather than creating a whole virtual operating system, Docker allows applications to use the same Linux kernel as the system that they’re running on and only requires applications be shipped with things not already running on the host computer.

And importantly, Docker is open source.

This means that anyone can contribute to Docker and extend it to meet their own needs if they need additional features that aren’t available out of the box.

Docker is a tool that is designed to benefit both developers and system administrators, making it a part of many DevOps (developers + operations) toolchains.

For developers, it means that they can focus on writing code without worrying about the system that it will ultimately be running on.

It also allows them to get a head start by using one of thousands of programs already designed to run in a Docker container as a part of their application. For operations staff, Docker gives flexibility and potentially reduces the number of systems needed because of its small footprint and lower overhead.

Getting started

Here are some resources that will help you get started using Docker in your workflow. Docker provides a web-based tutorial with a command-line simulator where you can try out basic Docker commands and begin to understand how it works. There is also a beginner's guide to Docker that introduces you to some basic commands and container terminology.

The need for containers

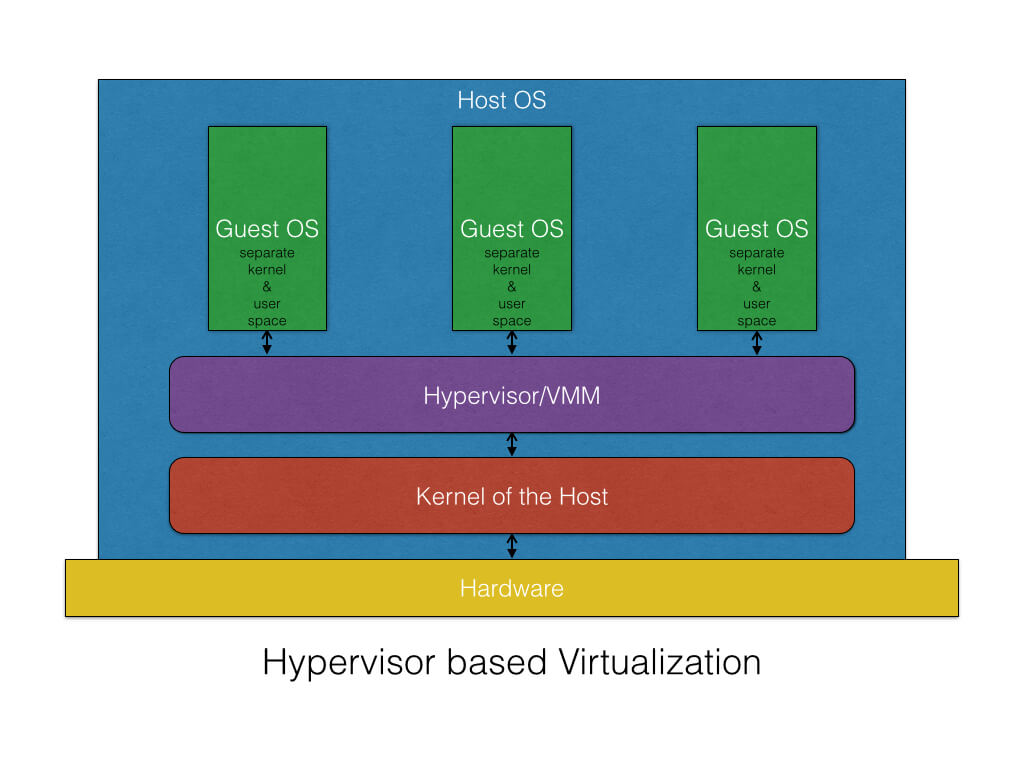

Hypervisor-based virtualization technologies have existed for a long time now.

With a hypervisor or paravirtualization mechanism, you can run any operating system on top of another: Windows on Linux, or the other way around.

Both the guest and the host operating system run with their own kernel, and the guest system communicates with the actual hardware through an abstraction layer provided by the hypervisor (or directly, depending on the technology).

This approach usually provides a high level of isolation and security, as all communication between the guest and the host normally goes through the hypervisor (again, depending on the technology).

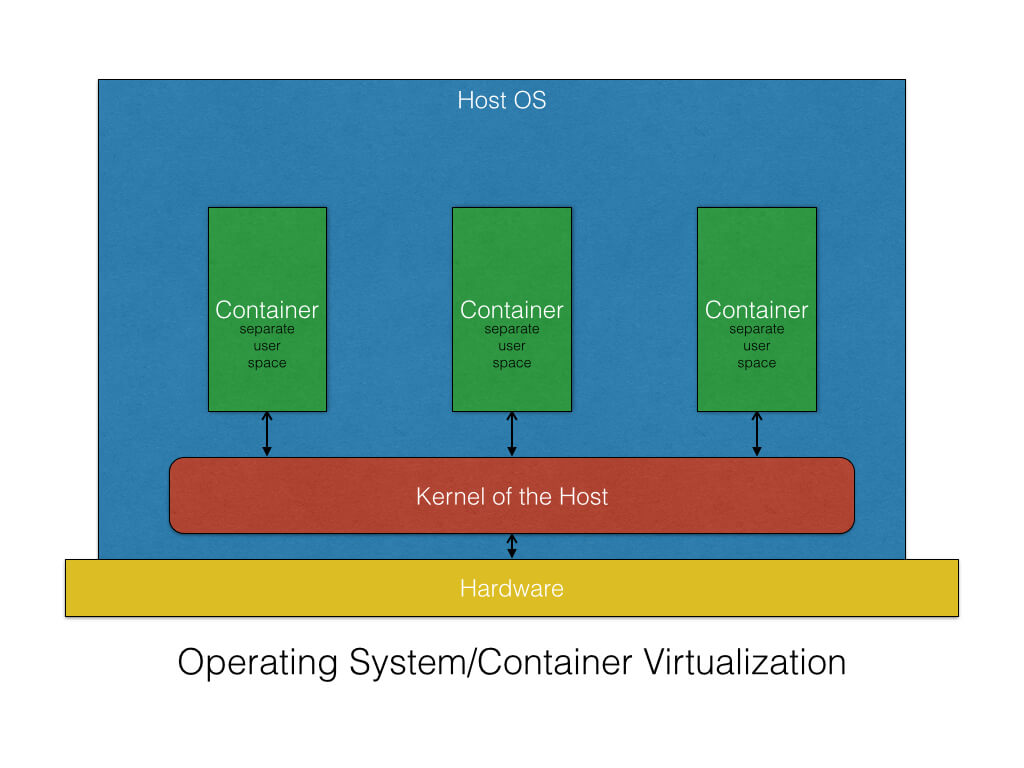

Virtualization has a “cost”: the overhead, which is between 10 and 20%. To reduce this overhead, another level of virtualization called “container virtualization” was introduced, which allows running multiple isolated user space instances on the same kernel.

What are containers?

Containers provide a lightweight virtual environment that groups and isolates a set of processes and resources such as memory, CPU, disk, etc., from the host and any other containers. The isolation guarantees that processes inside the container cannot see any processes or resources outside the container.

The difference between a container and a full-fledged VM is that all containers share the same kernel of the host system.

This gives them the advantage of being very fast with almost 0 performance overhead compared with VMs.

They also utilize the different computing resources better because of the shared kernel. However, like everything else, sharing the kernel also has its set of shortcomings.

- The type of container that can be installed on the host must work with the kernel of the host. Hence, you cannot install a Windows container on a Linux host or vice versa.

- Isolation and security: the isolation between the host and the container is not as strong as with hypervisor-based virtualization, since all containers share the same kernel of the host. There have been cases in the past where a process in the container has managed to escape into the kernel space of the host.
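One way to see the shared kernel for yourself is to print the kernel release on the host and again inside a container running on that host. The Python sketch below only reports what the current process sees; the function name `kernel_info` is made up for this example.

```python
import platform


def kernel_info():
    """Return the OS name and kernel release seen by this process."""
    return {
        "system": platform.system(),    # e.g. "Linux"
        "release": platform.release(),  # kernel release string
    }


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel {info['release']}")
```

On a Linux host, running this on the host and inside a container should print the same kernel release, whereas a VM guest would report its own kernel. On macOS or Windows, Docker typically runs containers inside a Linux VM, so the container will report that VM's kernel instead.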

Common cases where containers can be used

- Containers are used for two major purposes: as a regular operating system or as an application packaging mechanism. There are also other cases, like using containers as routers, but I don’t want to get into those in this blog.

- Containers are classified as OS containers and application containers.

OS containers

- OS containers are virtual environments that share the kernel of the host operating system but provide user space isolation.

- For all practical purposes, you can think of OS containers as VMs. You can install, configure and run different applications, libraries, etc., just as you would on any OS. Just as with a VM, anything running inside a container can only see resources that have been assigned to that container.

- OS containers are useful when you want to run a fleet of identical or different flavors of distros. Most of the time, containers are created from templates or images that determine the structure and contents of the container. This allows you to create containers that have identical environments, with the same package versions and configurations across all containers.

Application containers

- While OS containers are designed to run multiple processes and services, application containers are designed to package and run a single service.

- Container technologies like Docker and Rocket are examples of application containers.

- So even though they share the same kernel of the host, there are subtle differences that set them apart, which I would like to talk about using the example of a Docker container:

Run a single service as a container

- When a Docker container is launched, it runs a single process. This process is usually the one that runs your application when you create containers per application. This is very different from traditional OS containers, where you have multiple services running on the same OS.

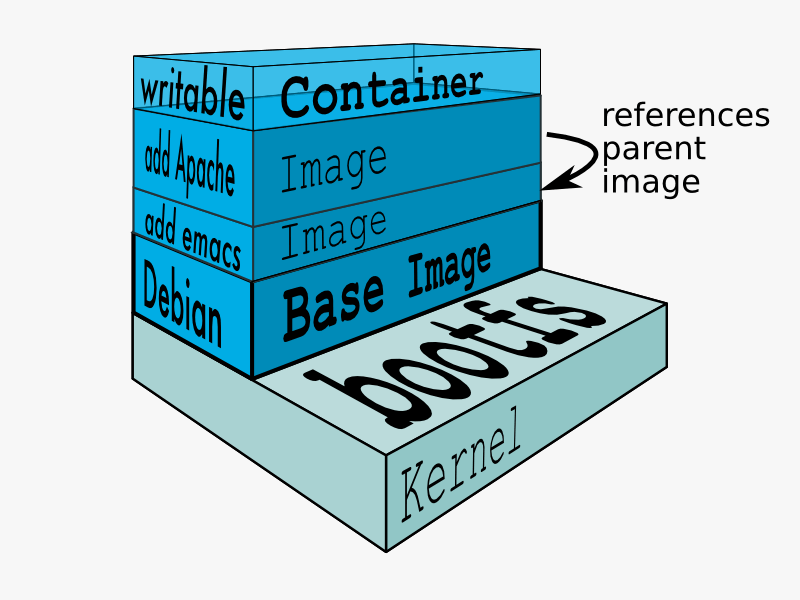

Layers of containers

- Any `RUN` commands you specify in the Dockerfile create a new layer for the container.

- In the end, when you run your container, Docker combines these layers and runs your container.

- Layering helps Docker reduce duplication and increase reuse. This is very helpful when you want to create different containers for your components. You can start with a base image that is common to all the components and then just add layers that are specific to each component. Layering also helps when you want to roll back your changes, as you can simply switch to the old layers, and there is almost no overhead involved in doing so.
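The layering idea can be illustrated with a toy model. The Python sketch below is not how Docker stores images; it only assumes that each layer is a mapping from file path to content and that a container sees the union of its layers, with upper layers taking precedence. All names here (`build_view`, `base`, the file paths) are made up for the example.

```python
from collections import ChainMap


def build_view(*layers):
    """Combine layers (lowest first) into one merged view.

    ChainMap searches its maps left to right, so the layers are
    reversed to let the most recently added layer take precedence.
    """
    return ChainMap(*reversed(layers))


# A base layer shared by every component (hypothetical contents).
base = {"/etc/os-release": "base-os", "/usr/bin/python": "python-3"}

# Component-specific layers stacked on top of the shared base.
api_layer = {"/app/main.py": "api code"}
worker_layer = {"/app/main.py": "worker code", "/etc/os-release": "patched-os"}

api = build_view(base, api_layer)
worker = build_view(base, worker_layer)

print(api["/app/main.py"])        # api code
print(worker["/etc/os-release"])  # patched-os (upper layer wins)
print(api["/etc/os-release"])     # base-os (base layer is untouched)

# "Rolling back" is just building the view without the top layer.
rolled_back = build_view(base)
print("/app/main.py" in rolled_back)  # False
```

Both views reuse the same `base` mapping rather than copying it, which is the toy equivalent of two containers sharing a common base image.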

Built on top of other container technologies

- Until some time ago, Docker was built on top of LXC. The Docker FAQ lists a number of points that set Docker apart from LXC.

- The idea behind application containers is that you create different containers for each of the components in your application. This approach works especially well when you want to deploy a distributed, multi-component system using the microservices architecture. The development team gets the freedom to package their own applications as a single deployable container. The operations team gets the freedom to deploy the container on the operating system of their choice, as well as the ability to scale the different applications both horizontally and vertically. The end state is a system that has different applications and services, each running as a container, that talk to each other using the APIs and protocols that each of them supports.

- In order to explain what it means to run an app container using Docker, let’s take a simple example of a three-tier architecture in web development which has a PostgreSQL data tier, a Node.js application tier and Nginx as the load balancer tier.

- In the simplest cases, using the traditional approach, one would put the database, the Node.js app and Nginx on the same machine.

Deploying this architecture as Docker containers would involve building a container image for each of the tiers. You then deploy these images independently, creating containers of varying sizes and capacity according to your needs.
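As a rough sketch of what "one container per tier" could look like, the Python snippet below builds (but does not execute) a `docker run` command for each tier. Everything specific here is an assumption for illustration: the container names, the `app-net` network (which you would need to create beforehand with `docker network create`), the hypothetical `my-node-app` image you would build yourself, and the example password. `postgres` and `nginx` refer to the official images of those names.

```python
import shlex


def docker_run_command(name, image, network, ports=None, env=None):
    """Build the argv list for a detached `docker run` of one tier."""
    argv = ["docker", "run", "-d", "--name", name, "--network", network]
    # Publish host ports to container ports, if any were requested.
    for host_port, container_port in (ports or {}).items():
        argv += ["-p", f"{host_port}:{container_port}"]
    # Pass environment variables into the container.
    for key, value in (env or {}).items():
        argv += ["-e", f"{key}={value}"]
    argv.append(image)
    return argv


# Hypothetical tiers; image names and the network are assumptions.
NETWORK = "app-net"
tiers = [
    docker_run_command("db", "postgres", NETWORK,
                       env={"POSTGRES_PASSWORD": "example"}),
    docker_run_command("app", "my-node-app", NETWORK),
    docker_run_command("lb", "nginx", NETWORK, ports={80: 80}),
]

# Print the commands instead of running them.
for argv in tiers:
    print(shlex.join(argv))
```

Because each tier is its own container, you could start more `app` containers behind the load balancer without touching the database container, which is the independent scaling described above.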

Summary

So in general, when you want to package and distribute your application as components, application containers are a good choice. If you just want an operating system in which you can install different libraries, languages, databases, etc., OS containers are better suited.

sources:@here
