Overview of Amazon Web Services Architecture:

AWS is made up of regions. Each region is a group of independent, physically separated data centers in a specific geographical area.

What are Availability Zones?

- Availability Zones are the data centers within a region. They are close enough to each other to permit low-latency links, which reduces the impact of fail-over and increases fault tolerance.

What is an edge location ?

- Edge locations are data centers that host only certain AWS services, for example CloudFront (CDN).

- An edge location is where end users access services located at AWS. Edge locations are found in most major cities around the world and are used by CloudFront (CDN) to distribute content to end users with lower latency. An edge location acts like a front end for the services we access in the AWS cloud.

Definition of Scalability:

Scalability is the ability of a system to expand and contract according to workload demand.

The system has to be:

- Resilient

- Operationally efficient

- Cost-effective as the service grows

Definition of Fault Tolerance:

- The ability of a system to keep operating without interruption when a service fails.

Which AWS technologies are designed to address scalability and fault tolerance?

- Auto-Scaling

- Route 53

- Availability Zones

- Multiple Regions

What is Amazon EC2 (Elastic Compute Cloud)?

Amazon Elastic Compute Cloud (Amazon EC2) provides scalable computing capacity in the Amazon Web Services (AWS) cloud. Using Amazon EC2 eliminates your need to invest in hardware up front, so you can develop and deploy applications faster. You can use Amazon EC2 to launch as many or as few virtual servers as you need, configure security and networking, and manage storage. Amazon EC2 enables you to scale up or down to handle changes in requirements or spikes in popularity, reducing your need to forecast traffic.

Definition of Cloud instances

A “cloud instance” is a virtual server instance in a public or private cloud network. In cloud instance computing, what used to be a single piece of hardware is implemented in software and runs on top of multiple computers. This makes cloud instances highly dynamic: you do not have to worry about how many servers can fit on a single piece of hardware without causing major slowdowns during peak hours. If performance maxes out, you can simply add more computers. The software can freely allocate resources to and from other computers, which maximizes utilization and helps prevent crashes. If a server grows beyond the limits of a single machine, the cloud software can be expanded to span multiple machines, whether temporarily or permanently.

Cloud instance computing also reduces the downtime associated with servicing hardware. A server in the cloud can be moved from one physical machine to another without going down, and the abstraction provided by the cloud lets data move from one point to another without the end user noticing. In sum, cloud instances are highly dynamic, can have resources reassigned as needed, and can be moved while they run.

What are the AWS Cloud Instance types?

- Amazon EC2 Reserved Instances provide a significant discount (up to 75%) compared to On-Demand pricing and provide a capacity reservation when used in a specific Availability Zone.

- With On-Demand instances you only pay for EC2 instances you use. The use of On-Demand instances frees you from the costs and complexities of planning, purchasing, and maintaining hardware and transforms what are commonly large fixed costs into much smaller variable costs.

- Spot Instances allow you to bid on spare Amazon EC2 computing capacity.

- Since Spot instances are often available at a discount compared to On-Demand pricing, you can significantly reduce the cost of running your applications, grow your application’s compute capacity and throughput for the same budget, and enable new types of cloud computing applications.
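To make the pricing difference concrete, here is a minimal sketch that compares a year of On-Demand usage with the same usage at the maximum Reserved Instance discount quoted above. The hourly rate is a made-up placeholder, not an actual AWS price, and the function name is only illustrative.

```python
HOURS_PER_YEAR = 24 * 365

def yearly_cost(hourly_rate, discount=0.0):
    """Cost of running one instance all year at a given discount (0.0 to 1.0)."""
    return hourly_rate * (1 - discount) * HOURS_PER_YEAR

# Hypothetical On-Demand rate in USD per hour (an assumption for illustration).
on_demand_rate = 0.10

on_demand = yearly_cost(on_demand_rate)
# "Up to 75%" is the best case quoted for Reserved Instances.
reserved_best_case = yearly_cost(on_demand_rate, discount=0.75)

print(f"On-Demand: ${on_demand:.2f} per year")
print(f"Reserved (best case): ${reserved_best_case:.2f} per year")
```

The real saving depends on the instance type, term, and payment option, so treat the 75% figure as an upper bound.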

Auto-Scaling increases the number of instances on demand based on certain metrics, scaling the fleet up to meet the load.
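A minimal sketch of metric-driven scaling, assuming a simple proportional rule: resize the fleet so that the average metric lands near a target value. The function name, the target, and the min/max bounds are illustrative assumptions and not part of the Auto Scaling API.

```python
import math

def desired_capacity(current_instances, metric_value, target_value,
                     min_size=1, max_size=10):
    """Proportional scaling rule: size the fleet so the per-instance metric
    would land near target_value, clamped to [min_size, max_size]."""
    if current_instances <= 0 or target_value <= 0:
        raise ValueError("current_instances and target_value must be positive")
    raw = math.ceil(current_instances * metric_value / target_value)
    return max(min_size, min(max_size, raw))

# 4 instances at 90% average CPU with a 60% target: the fleet should grow.
print(desired_capacity(4, metric_value=90, target_value=60))
# 4 instances at 15% average CPU with a 60% target: the fleet can shrink.
print(desired_capacity(4, metric_value=15, target_value=60))
```

The clamp to a minimum and maximum size keeps a noisy metric from scaling the fleet to zero or to an unbounded cost.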

Amazon EC2 provides a wide selection of instance types optimized to fit different use cases.

Instance types comprise varying combinations of CPU, memory, storage, and networking capacity and give you the flexibility to choose the appropriate mix of resources for your applications. Each instance type includes one or more instance sizes, allowing you to scale your resources to the requirements of your target workload.

T2 instances are Burstable Performance Instances.

M4 instances are the latest generation of General Purpose Instances.

M3 instances provide a balance of compute, memory, and network resources.

C4 instances are the latest generation of Compute-optimized instances.

C3 instances are suited to high-performance front-end fleets, web servers, batch processing, distributed analytics, high-performance science and engineering applications, ad serving, MMO gaming, and video encoding.

X1 Instances are optimized for large-scale, enterprise-class, in-memory applications and have the lowest price per GiB of Memory.

R3 instances are optimized for memory-intensive applications and offer lower price per GiB of RAM.

R4 instances are optimized for memory-intensive applications and offer better price per GiB of RAM than R3.

P2 instances are intended for general-purpose GPU compute applications.

G2 instances are optimized for graphics-intensive applications.

F1 instances offer customizable hardware acceleration with field-programmable gate arrays (FPGAs).

I2 instances are High I/O Instances that provide very fast SSD-backed instance storage optimized for very high random I/O performance, and provide high IOPS at a low cost.

D2 instances feature up to 48 TB of HDD-based local storage and deliver high disk throughput.

What is Amazon Virtual Private Cloud (VPC)?

- Amazon Virtual Private Cloud (Amazon VPC) lets you provision a logically isolated section of the Amazon Web Services (AWS) cloud where you can launch AWS resources in a virtual network that you define. You have complete control over your virtual networking environment, including selection of your own IP address range, creation of subnets, and configuration of route tables and network gateways. You can use both IPv4 and IPv6 in your VPC for secure and easy access to resources and applications.

- You can easily customize the network configuration for your Amazon Virtual Private Cloud. For example, you can create a public-facing subnet for your webservers that has access to the Internet, and place your backend systems such as databases or application servers in a private-facing subnet with no Internet access. You can leverage multiple layers of security, including security groups and network access control lists, to help control access to Amazon EC2 instances in each subnet.

- Additionally, you can create a Hardware Virtual Private Network (VPN) connection between your corporate datacenter and your VPC and leverage the AWS cloud as an extension of your corporate datacenter.
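The public/private subnet layout described above can be sketched with Python's standard `ipaddress` module. The `10.0.0.0/16` range and the /24 split are assumptions chosen for illustration. This only models the address planning and does not call any AWS API.

```python
import ipaddress

# Hypothetical VPC address range (an assumption for illustration).
vpc = ipaddress.ip_network("10.0.0.0/16")

# Carve the VPC range into /24 subnets and take the first two.
subnets = list(vpc.subnets(new_prefix=24))
public_subnet, private_subnet = subnets[0], subnets[1]

print("VPC:", vpc)
print("Public subnet (web servers):", public_subnet)
print("Private subnet (databases):", private_subnet)

# Check which subnet a given address belongs to.
db_address = ipaddress.ip_address("10.0.1.25")
print(db_address in private_subnet)
```

In a real VPC the difference between the two subnets comes from their route tables (whether there is a route to an Internet gateway), not from the address range itself.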

What is Elastic Load Balancing (ELB)?

- Load balancing is a common method for distributing traffic among servers. ELB can send traffic to different instances in different Availability Zones.
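As an illustration of the idea, the sketch below spreads requests over instances in two zones using plain round-robin. Round-robin is just one simple strategy picked for this example and is not a statement about how ELB chooses targets internally. The instance and zone names are hypothetical.

```python
from collections import Counter
from itertools import cycle

# Hypothetical instances, tagged with the Availability Zone they run in.
instances = [
    ("web-1", "zone-a"),
    ("web-2", "zone-b"),
    ("web-3", "zone-a"),
    ("web-4", "zone-b"),
]

def distribute(requests, targets):
    """Assign each request to the next target in round-robin order."""
    rotation = cycle(targets)
    return [(request, next(rotation)) for request in requests]

assignments = distribute(range(8), instances)

# Count how many requests landed in each zone.
per_zone = Counter(zone for _, (_, zone) in assignments)
print(per_zone)
```

With eight requests and four instances split evenly across two zones, each zone receives four requests, so losing one zone still leaves half the fleet serving traffic.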

What is Route 53 ?

- Route 53 is a Domain Name System (DNS) service. It hosts the internal and external DNS for your application environment.

- It is commonly used with ELB to direct traffic from your domain to the load balancer.

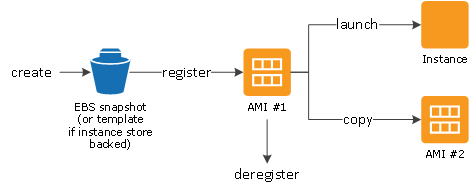

What is an AMI (Amazon Machine Image)?

- An AMI is a template that contains a pre-built software configuration. AMIs are used with Auto-Scaling and disaster recovery.

AWS Cloud Storage Solution Types

AWS offers a complete range of cloud storage services to support both application and archival compliance requirements. Select from object, file, and block storage services as well as cloud data migration options to start designing the foundation of your cloud IT environment.

What are Instance Store-Backed Instances (Ephemeral Storage)?

- Instance store is temporary block-level storage that lasts for the life of the running instance.

- The data survives only as long as your instance is not stopped or terminated.

What is Amazon EBS (Elastic Block Store)?

- Amazon Elastic Block Store (Amazon EBS) provides block level storage volumes for use with EC2 instances. EBS volumes are highly available and reliable storage volumes that can be attached to any running instance that is in the same Availability Zone. EBS volumes that are attached to an EC2 instance are exposed as storage volumes that persist independently from the life of the instance.

- You can create EBS General Purpose SSD (gp2), Provisioned IOPS SSD (io1), Throughput Optimized HDD (st1), and Cold HDD (sc1) volumes up to 16 TiB in size.

- EBS is network-attached block storage.

- Easy to back up with snapshots, which are stored in Amazon S3.

- You can provision additional IOPS to improve I/O, or use an EBS-optimized instance to improve network throughput between the instance and the EBS volume.

What is Amazon Simple Storage Service (Amazon S3) ?

- Simple Storage Service (S3) is an object Storage service from AWS, with a simple web service interface to store and retrieve any amount of data from anywhere on the web. It is designed to deliver 99.999999999% durability, and scale past trillions of objects worldwide.

- Simple to use with a web-based management console and mobile app. S3 also provides full REST APIs and SDK.

- S3 provides durable infrastructure to store important data and is designed for durability of 99.999999999% of objects.

- S3 supports data transfer over SSL and automatic encryption of your data once it is uploaded.

- Amazon S3 Standard is designed for up to 99.99% availability of objects over a given year and is backed by the Amazon S3 Service Level Agreement.
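The durability and availability percentages above are easier to grasp as arithmetic. The sketch below assumes each object independently survives a year with the quoted probability, and uses a hypothetical object count. `Decimal` is used so that the long run of nines is not distorted by floating-point rounding.

```python
from decimal import Decimal

durability = Decimal("0.99999999999")   # 99.999999999%, as quoted above
availability = Decimal("0.9999")        # 99.99%, as quoted above

# Hypothetical number of stored objects (an assumption for illustration).
stored_objects = 10_000_000

# Expected objects lost per year if each object independently survives the
# year with probability `durability`.
expected_losses_per_year = stored_objects * (1 - durability)

# Maximum unavailability per year implied by the availability figure.
minutes_per_year = Decimal(365 * 24 * 60)
unavailable_minutes = minutes_per_year * (1 - availability)

print(expected_losses_per_year)
print(unavailable_minutes)
```

Under these assumptions, ten million objects work out to 0.0001 expected losses per year (roughly one object every 10,000 years), and 99.99% availability allows about 52.56 minutes of unavailability per year.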

What is Amazon Elastic File System (Amazon EFS)?

- Amazon Elastic File System (Amazon EFS) provides simple, scalable file storage for use with Amazon EC2 instances in the AWS Cloud. Amazon EFS is easy to use and offers a simple interface that allows you to create and configure file systems quickly and easily. With Amazon EFS, storage capacity is elastic, growing and shrinking automatically as you add and remove files, so your applications have the storage they need, when they need it.

What is Amazon Glacier ?

- Amazon Glacier is a secure, durable, and extremely low-cost cloud storage service for data archiving and long-term backup. Customers can reliably store large or small amounts of data for as little as $0.004 per gigabyte per month, a significant savings compared to on-premises solutions. To keep costs low yet suitable for varying retrieval needs, Amazon Glacier provides three options for access to archives, from a few minutes to several hours.

What is Amazon Storage Gateway ?

- Storage Gateway connects a software appliance in your local datacenter to cloud-based storage such as Amazon S3.

- The AWS Storage Gateway service seamlessly enables hybrid storage between on-premises storage environments and the AWS Cloud. It combines a multi-protocol storage appliance with highly efficient network connectivity to Amazon cloud storage services, delivering local performance with virtually unlimited scale. Customers use it in remote offices and datacenters for hybrid cloud workloads, backup and restore, archive, disaster recovery, and tiered storage.

- The Storage Gateway virtual appliance connects seamlessly to your local infrastructure as a file server, as a volume, or as a virtual tape library (VTL). This seamless connection makes it simple for organizations to augment existing on-premises storage investments with the high scalability, extreme durability and low cost of cloud storage.

Cloud Data Migration Challenge

- The realities of data transport apply to most projects. How do you gracefully move from your current location to your new cloud, with minimal disruption, cost and time? What is the smartest way to actually move your GB, TB or PB of data?

How much data can move how far how fast? For a best-case scenario use this formula:

Number of Days = (Total Bytes) / (Megabits per second * 125 * 1000 * Network Utilization * 60 seconds * 60 minutes * 24 hours)

For example, if you have a T1 connection (1.544 Mbps) and 1 TB (1024 * 1024 * 1024 * 1024 bytes) to move in or out of AWS, the theoretical minimum time it would take to load over your network connection at 80% network utilization is 82 days.
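The transfer-time formula above can be checked with a few lines of Python. The function and parameter names are my own and only illustrative. The inputs are the T1 example from the text.

```python
def transfer_days(total_bytes, megabits_per_second, network_utilization):
    """Best-case transfer time in days, following the formula above.

    125 * 1000 converts megabits per second to bytes per second
    (1 Mbps = 1,000,000 bits/s = 125,000 bytes/s).
    """
    bytes_per_second = megabits_per_second * 125 * 1000 * network_utilization
    seconds_per_day = 60 * 60 * 24
    return total_bytes / (bytes_per_second * seconds_per_day)

one_tb = 1024 ** 4  # 1 TB as defined in the text above
days = transfer_days(one_tb, megabits_per_second=1.544, network_utilization=0.8)
print(f"{days:.1f} days")
```

The result is a little over 82 days, which matches the figure in the text. Plugging in your own link speed shows quickly whether a network transfer is realistic or whether an offline migration option is worth considering.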

AWS Database Solution Types

What Is Amazon Relational Database Service (Amazon RDS) ?

- Amazon RDS is a fully managed relational database service.

- Each DB instance runs a DB engine.

- Amazon RDS currently supports the MySQL, MariaDB, PostgreSQL, Oracle, and Microsoft SQL Server DB engines.

- Each DB engine has its own supported features, and each version of a DB engine may include specific features. Additionally, each DB engine has a set of parameters in a DB parameter group that control the behavior of the databases that it manages.

What is Aurora on Amazon RDS ?

- Amazon Aurora is a fully managed, MySQL-compatible, relational database engine that combines the speed and reliability of high-end commercial databases with the simplicity and cost-effectiveness of open-source databases.

- Amazon Aurora is a drop-in replacement for MySQL and makes it simple and cost-effective to set up, operate, and scale your new and existing MySQL deployments, thus freeing you to focus on your business and applications.

- Amazon RDS provides administration for Amazon Aurora by handling routine database tasks such as provisioning, patching, backup, recovery, failure detection, and repair. Amazon RDS also provides push-button migration tools to convert your existing Amazon RDS for MySQL applications to Amazon Aurora.

What is Amazon DynamoDB ?

- Amazon DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. You can use Amazon DynamoDB to create a database table that can store and retrieve any amount of data, and serve any level of request traffic. Amazon DynamoDB automatically spreads the data and traffic for the table over a sufficient number of servers to handle the request capacity specified by the customer and the amount of data stored, while maintaining consistent and fast performance.

What is Amazon ElastiCache ?

- Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store or cache in the cloud.

- The service improves the performance of web applications by allowing you to retrieve information from fast, managed, in-memory data stores, instead of relying entirely on slower disk-based databases.

Amazon ElastiCache supports two open-source in-memory engines:

- Redis – a fast, open source, in-memory data store and cache. Amazon ElastiCache for Redis is a Redis-compatible in-memory service that delivers the ease-of-use and power of Redis along with the availability, reliability and performance suitable for the most demanding applications. Both single-node and up to 15-shard clusters are available, enabling scalability to up to 3.55 TiB of in-memory data. ElastiCache for Redis is fully managed, scalable, and secure – making it an ideal candidate to power high-performance use cases such as Web, Mobile Apps, Gaming, Ad-Tech, and IoT.

- Memcached – a widely adopted memory object caching system. ElastiCache is protocol compliant with Memcached, so popular tools that you use today with existing Memcached environments will work seamlessly with the service.
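A common way to use an in-memory store in front of a slower database is the cache-aside pattern, sketched below. A plain dictionary stands in for Redis or Memcached, and `slow_database_lookup` is a hypothetical placeholder for a disk-based query. Neither is an ElastiCache API.

```python
import time

cache = {}  # stand-in for an in-memory store such as Redis or Memcached

def slow_database_lookup(user_id):
    """Hypothetical slow, disk-based query (simulated with a short sleep)."""
    time.sleep(0.05)
    return {"id": user_id, "name": f"user-{user_id}"}

def get_user(user_id):
    """Cache-aside read: try the cache first, fall back to the database."""
    if user_id in cache:
        return cache[user_id]
    record = slow_database_lookup(user_id)
    cache[user_id] = record  # populate the cache for the next reader
    return record

first = get_user(42)   # cache miss: goes to the database
second = get_user(42)  # cache hit: served from memory
print(first == second, len(cache))
```

A production version would also set an expiry on cached entries and invalidate them when the underlying row changes. Both are left out here to keep the sketch short.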

What is Amazon CloudWatch ?

- Amazon CloudWatch is a monitoring service for AWS cloud resources and the applications you run on AWS.

- Amazon CloudWatch collects and tracks metrics, collects and monitors log files, sets alarms, and automatically reacts to changes in your AWS resources.

- Amazon CloudWatch monitors AWS resources such as Amazon EC2 instances, Amazon DynamoDB tables, and Amazon RDS DB instances, as well as custom metrics generated by your applications and services, and any log files your applications generate.
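To illustrate how an alarm on a metric works in principle, here is a simplified sketch: the alarm fires when the metric stays above a threshold for a number of consecutive periods. The function and the sample data are hypothetical. The real CloudWatch evaluation has more options than this.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return "ALARM" if the most recent `evaluation_periods` datapoints all
    exceed `threshold`, "OK" if they do not, and "INSUFFICIENT_DATA" if
    there are not enough datapoints to decide."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"

# Hypothetical CPU utilization samples (percent), one per period.
cpu = [40, 55, 82, 91, 88]
print(alarm_state(cpu, threshold=80, evaluation_periods=3))
print(alarm_state(cpu, threshold=80, evaluation_periods=4))
```

Requiring several consecutive breaches is what keeps a single short spike from triggering an automated reaction such as a scaling action.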

What is Amazon EMR (Elastic MapReduce)?

- Amazon EMR provides a managed Hadoop framework that makes it easy, fast, and cost-effective to process vast amounts of data across dynamically scalable Amazon EC2 instances. You can also run other popular distributed frameworks such as Apache Spark, HBase, Presto, and Flink in Amazon EMR, and interact with data in other AWS data stores such as Amazon S3 and Amazon DynamoDB.

- Amazon EMR securely and reliably handles a broad set of big data use cases, including log analysis, web indexing, data transformations (ETL), machine learning, financial analysis, scientific simulation, and bioinformatics.

What is Amazon Simple Workflow Service (SWF) ?

- Amazon SWF helps developers build, run, and scale background jobs that have parallel or sequential steps. You can think of Amazon SWF as a fully-managed state tracker and task coordinator in the Cloud.

- If your app’s steps take more than 500 milliseconds to complete, you need to track the state of processing, and you need to recover or retry if a task fails, Amazon SWF can help you.

What is AWS Elastic Beanstalk ?

- AWS Elastic Beanstalk is an easy-to-use service for deploying and scaling web applications and services developed with Java, .NET, PHP, Node.js, Python, Ruby, Go, and Docker on familiar servers such as Apache, Nginx, Passenger, and IIS.

- You can simply upload your code and Elastic Beanstalk automatically handles the deployment, from capacity provisioning, load balancing, auto-scaling to application health monitoring. At the same time, you retain full control over the AWS resources powering your application and can access the underlying resources at any time.

- There is no additional charge for Elastic Beanstalk – you pay only for the AWS resources needed to store and run your applications.

What is AWS CloudFormation ?

- AWS CloudFormation gives developers and systems administrators an easy way to create and manage a collection of related AWS resources, provisioning and updating them in an orderly and predictable fashion.

- You can use AWS CloudFormation’s sample templates or create your own templates to describe the AWS resources, and any associated dependencies or runtime parameters, required to run your application.
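As a rough idea of what a template looks like, the sketch below builds a very small one as a Python dictionary and prints it as JSON. The logical name `ExampleBucket` and the description are hypothetical. A real template would usually declare several related resources and parameters.

```python
import json

# Minimal template describing one resource. The logical name
# "ExampleBucket" is a hypothetical label chosen for this sketch.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Illustrative template with a single S3 bucket",
    "Resources": {
        "ExampleBucket": {
            "Type": "AWS::S3::Bucket",
        },
    },
}

# Serialize to the JSON text that would be saved as a template file.
template_json = json.dumps(template, indent=2)
print(template_json)
```

Because the template is plain data, it can be kept in version control and reviewed like any other code.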

What is AWS Identity and Access Management (IAM) ?

- AWS Identity and Access Management (IAM) is a web service that helps you securely control access to AWS resources for your users. You use IAM to control who can use your AWS resources (authentication) and what resources they can use and in what ways (authorization).
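Authorization in IAM is expressed as JSON policy documents. The sketch below builds a small policy that allows reading objects from one bucket. The bucket name `example-bucket` is a hypothetical placeholder, and the policy is an illustration and not a recommended baseline.

```python
import json

# The bucket name "example-bucket" is a hypothetical placeholder.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",                            # allow or deny
            "Action": ["s3:GetObject"],                   # what may be done
            "Resource": "arn:aws:s3:::example-bucket/*",  # on which resources
        }
    ],
}

# Serialize to the JSON text that would be attached to a user, group, or role.
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Granting only the specific actions and resources a user needs, as in this sketch, keeps the impact of leaked credentials small.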

What are the security compliance programs at AWS?

- Amazon Web Services Cloud Compliance enables customers to understand the robust controls in place at AWS to maintain security and data protection in the cloud. As systems are built on top of AWS cloud infrastructure, compliance responsibilities will be shared.

- By tying together governance-focused, audit-friendly service features with applicable security compliance regulations or audit standards, AWS Compliance enablers build on traditional programs, helping customers to establish and operate in an AWS security control environment.

- The EU Data Protection Directive refers to the Directive on the protection of individuals with regard to the processing of personal data and on the free movement of such data (also known as Directive 95/46/EC). Broadly, this Directive sets out a number of data protection requirements, which apply when personal data is being processed.

- The Payment Card Industry Data Security Standard (also known as PCI DSS) is a proprietary information security standard administered by the PCI Security Standards Council, which was founded by American Express, Discover Financial Services, JCB International, MasterCard Worldwide and Visa Inc.

- PCI DSS applies to all entities that store, process or transmit cardholder data (CHD) and/or sensitive authentication data (SAD) including merchants, processors, acquirers, issuers, and service providers. The PCI DSS is mandated by the card brands and administered by the Payment Card Industry Security Standards Council.

- ISO 27001 is a security management standard that specifies security management best practices and comprehensive security controls following the ISO 27002 best practice guidance. The basis of this certification is the development and implementation of a rigorous security program, which includes the development and implementation of an Information Security Management System (ISMS) which defines how AWS perpetually manages security in a holistic, comprehensive manner.

Documents:

- SOC 3 Report

- ISO 27001 Certification

- ISO 27017 Certificate

- ISO 27018 Certificate

- ISO 9001 Certificate

What is AWS Direct Connect ?

Reduce network costs

- Reduce bandwidth commitment to corporate ISP over public Internet.

- Data transferred over Direct Connect is billed at a lower rate.

Increase Network Consistency

- Dedicated private connections reduce latency compared with sending the traffic via public routing.

Dedicated private network connections to on-premises

- Connect the Direct Connect connection to a virtual private gateway (VGW) in your VPC for a dedicated private connection from on-premises to the VPC.

- Use multiple virtual interfaces (VIFs) to connect to multiple VPCs.

What is Amazon Kinesis ?

- Amazon Kinesis is a platform for streaming data on AWS, offering powerful services to make it easy to load and analyze streaming data, and also providing the ability for you to build custom streaming data applications for specialized needs.

- Web applications, mobile devices, wearables, industrial sensors, and many software applications and services can generate staggering amounts of streaming data – sometimes TBs per hour – that need to be collected, stored, and processed continuously.

Amazon Kinesis Firehose

- Amazon Kinesis Firehose is the easiest way to load streaming data into AWS. It can capture, transform, and load streaming data into Amazon Kinesis Analytics, Amazon S3, Amazon Redshift, and Amazon Elasticsearch Service, enabling near real-time analytics with existing business intelligence tools and dashboards you’re already using today.

Amazon Kinesis Analytics

- Amazon Kinesis Analytics is the easiest way to process streaming data in real time with standard SQL without having to learn new programming languages or processing frameworks. Amazon Kinesis Analytics enables you to create and run SQL queries on streaming data so that you can gain actionable insights and respond to your business and customer needs promptly.

Amazon Kinesis Streams

- Amazon Kinesis Streams enables you to build custom applications that process or analyze streaming data for specialized needs. Amazon Kinesis Streams can continuously capture and store terabytes of data per hour from hundreds of thousands of sources such as website clickstreams, financial transactions, social media feeds, IT logs, and location-tracking events.
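A stream spreads its records over shards according to each record's partition key. The sketch below shows one way such a mapping can work, assuming an MD5 hash of the key and an even split of the 128-bit hash space across shards. The function name, the keys, and the even split are assumptions for illustration and not the service's API.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value

def shard_for(partition_key, shard_count):
    """Map a partition key to a shard index, assuming the 128-bit hash space
    is split into `shard_count` equal, contiguous ranges."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    return hash_value * shard_count // HASH_SPACE

# Hypothetical partition keys, e.g. one per data source.
for key in ["sensor-1", "sensor-2", "clickstream-eu", "clickstream-us"]:
    print(key, "-> shard", shard_for(key, shard_count=4))
```

Records with the same partition key always land on the same shard, which preserves their relative order. Choosing keys with many distinct values spreads the load more evenly.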

To be continued….
